MOVE is a comprehensive software suite developed by scientists for scientists to improve, speed up and standardize motion-related data analysis.

So what makes it unique?

- Recording

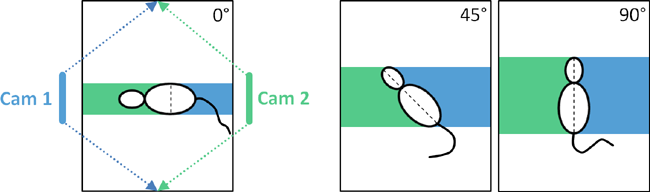

Using two oppositely located, horizontal cameras to monitor a single object ensures a close view of the object's head at any point in time, independent of its current orientation. Consequently, time intervals of slight head movements (e.g. sniffing) are much easier to distinguish from freezing periods (Meuth et al., 2013).

MOVE therefore allows synchronized video recordings from two arbitrary video sources in parallel. Because even cost-effective HD webcams connected via USB are supported, any given setup can easily be expanded without endangering ongoing projects.

- Motion analysis

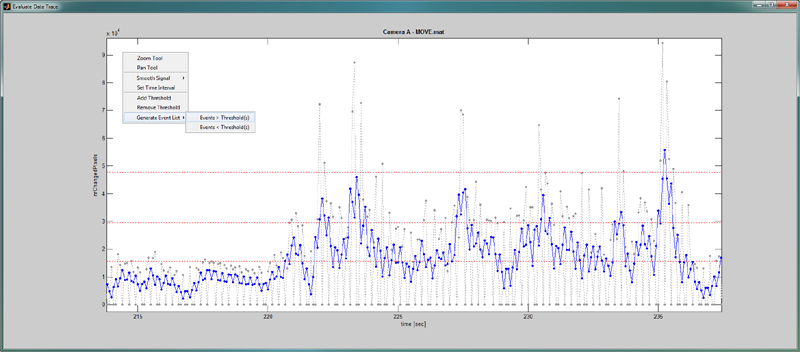

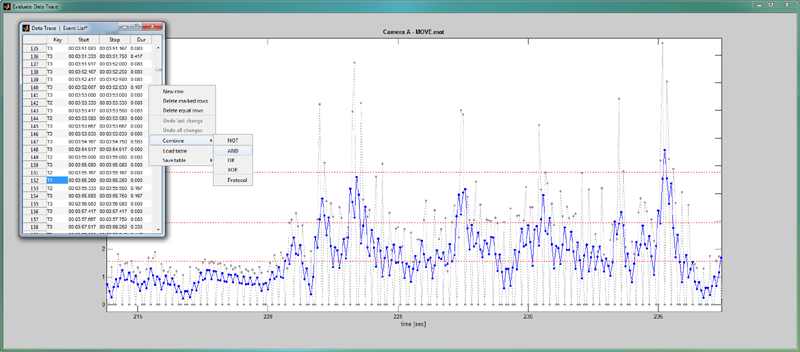

Videos recorded by MOVE or any other software can be analyzed in MOVE via an unsupervised batch process that quantifies the observed object's motion over time. The resulting motion trace can be further subdivided into multiple motion levels representing, e.g., immobility, sniffing, grooming and exploration in order to generate a corresponding standard-compliant event list. Combining an event list with the experiment's stimulation protocol automatically generates an overview that shows to what extent each event / key / behavior occurred in each phase of the protocol.
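To illustrate how an event list and a stimulation protocol might be combined into such an overview, here is a minimal sketch. It is not MOVE's implementation: the tuple layouts `(start, end, label)` and `(start, end, phase)`, the function name `phase_overview` and the example values are assumptions made purely for illustration.

```python
from collections import defaultdict


def phase_overview(events, protocol):
    """Return {phase: {label: fraction of the phase covered by that label}}.

    events   -- list of (start, end, label) tuples in seconds (assumed layout)
    protocol -- list of (start, end, phase) tuples in seconds (assumed layout)
    """
    overview = {}
    for p_start, p_end, phase in protocol:
        covered = defaultdict(float)
        for e_start, e_end, label in events:
            # Length of the overlap between this event and this phase, if any.
            overlap = min(e_end, p_end) - max(e_start, p_start)
            if overlap > 0:
                covered[label] += overlap
        duration = p_end - p_start
        overview[phase] = {label: t / duration for label, t in covered.items()}
    return overview


# Hypothetical example data: three behaviors and a two-phase protocol.
events = [(0.0, 20.0, "exploration"), (20.0, 50.0, "freezing"), (50.0, 60.0, "sniffing")]
protocol = [(0.0, 30.0, "baseline"), (30.0, 60.0, "tone")]
overview = phase_overview(events, protocol)

for phase, shares in overview.items():
    print(phase, {label: round(share, 2) for label, share in shares.items()})
```

Reporting fractions rather than absolute durations keeps phases of different length comparable; absolute times could be returned just as easily by skipping the division.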

- Multidimensionality

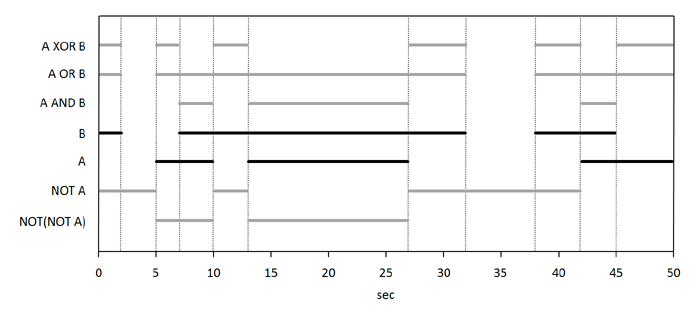

If two eyes see more than one, it follows that they cannot see exactly the same thing. So how can multiple data sources be combined, e.g. two oppositely located video cameras (2D) or these two cameras plus EEG and heart rate (4D)? For this purpose, MOVE provides the Boolean algebra operators AND, OR, XOR and NOT, which can be applied to any given pair of event lists.

Imagine that each solid line in the example graph represents a continuous freezing event reported by one of two cameras, A and B. Since A and B do not match perfectly, there must have been situations in which one camera missed, e.g., slight head movements during sniffing while the other had a clear view of them. In this case, the final freezing event list should comprise only those time periods in which both cameras reported freezing or, in other words, in which the solid lines overlap. This can be accomplished by simply combining event lists A and B with the AND operator (see "A AND B").

Now let A represent the harmonized freezing result of cameras A and B from the previous example, and let B represent phases of increased heart rate. Applying the AND operator again then yields a potentially more accurate freezing event list, A AND B, that already combines three different data sources (3D) but still remains open to further expansion.

The last example reveals the difference between the manual freezing score of an experienced observer (A) and the semi-automated MOVE equivalent (B). Combining both event lists via the XOR operator yields an event list (see "A XOR B") that comprises exactly those time periods in which only one of the two event lists reported freezing.
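The examples above can be sketched in a few lines of code. The following is an illustration only, not MOVE's implementation: it assumes an event list is a sorted list of non-overlapping `(start, end)` intervals in seconds, and the names `combine`, `event_not` and all example values are hypothetical.

```python
def combine(a, b, op):
    """Combine two event lists with a Boolean function op(in_a, in_b)."""
    # Every start or end time is a point where the result may change.
    cuts = sorted({t for start, end in a + b for t in (start, end)})
    result = []
    for left, right in zip(cuts, cuts[1:]):
        mid = (left + right) / 2  # any point inside the segment will do
        in_a = any(start <= mid < end for start, end in a)
        in_b = any(start <= mid < end for start, end in b)
        if op(in_a, in_b):
            if result and result[-1][1] == left:
                result[-1] = (result[-1][0], right)  # merge adjacent segments
            else:
                result.append((left, right))
    return result


def event_not(a, start, end):
    """Complement of event list a within the recording span [start, end)."""
    return combine(a, [(start, end)], lambda in_a, in_span: in_span and not in_a)


# Hypothetical freezing events reported by two cameras.
cam_a = [(0, 10), (20, 30)]
cam_b = [(5, 12), (20, 25)]

a_and_b = combine(cam_a, cam_b, lambda x, y: x and y)  # both cameras agree
a_or_b = combine(cam_a, cam_b, lambda x, y: x or y)    # at least one camera
a_xor_b = combine(cam_a, cam_b, lambda x, y: x != y)   # exactly one camera

# Repeated AND: add a third (hypothetical) source, phases of increased heart rate.
heart_rate = [(0, 8), (22, 40)]
freezing_3d = combine(a_and_b, heart_rate, lambda x, y: x and y)

print(a_and_b, a_or_b, a_xor_b, freezing_3d, event_not(cam_a, 0, 40))
```

Note that NOT, being unary, needs an explicit recording span in this sketch, since the complement of an event list is only meaningful relative to the total duration observed.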

- Keylogger

Key events in MOVE can be logged in reference to either the time scale of a playing video file or the system clock, e.g. when the camera preview serves as the video source. One can toggle on the fly between the regular operation mode and the lock mode, in which a key is automatically kept pressed until another key is pressed (starting a new event) or the same key is pressed again (ending the current event).
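The lock mode described above behaves like a small state machine. The sketch below is a hypothetical model of that behavior, not MOVE's code: the class name `LockModeLogger`, the `(key, start, end)` event layout and the example key presses are assumptions, and the timestamp `t` stands in for either the video time or the system clock.

```python
class LockModeLogger:
    """Toy model of lock mode: a key stays 'pressed' until the next key press."""

    def __init__(self):
        self.active = None  # (key, start_time) of the running event, if any
        self.events = []    # finished events as (key, start, end)

    def press(self, key, t):
        if self.active is not None:
            active_key, start = self.active
            # Any key press ends the event that is currently running.
            self.events.append((active_key, start, t))
            self.active = None
            if active_key == key:
                return  # same key pressed again: only end the current event
        # The first key, or a different key, starts a new event.
        self.active = (key, t)


logger = LockModeLogger()
logger.press("f", 1.0)  # start event "f"
logger.press("g", 4.0)  # different key: end "f", start "g"
logger.press("g", 6.0)  # same key: end "g", nothing running afterwards
logger.press("f", 7.0)  # start a new "f" event, still running
print(logger.events, logger.active)
```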

Interested and want to give it a try? Then please follow the few registration steps described here.

PEAK is another in-house development that could perfectly complement MOVE by providing a comprehensive mathematical analysis of the motion trace.

References

Expression of freezing and fear-potentiated startle during sustained fear in mice. Daldrup T, Remmes J, Lesting J, Gaburro S, Fendt M, Meuth P, Kloke V, Pape HC and Seidenbecher T. Genes Brain Behav. 2015 Mar;14(3):281-91.

Standardizing the analysis of conditioned fear in rodents: a multidimensional software approach. Meuth P, Gaburro S, Lesting J, Legler A, Herty M, Budde T, Meuth SG, Seidenbecher T, Lutz B and Pape HC. Genes Brain Behav. 2013 Jul;12(5):583-92.

Screen shots

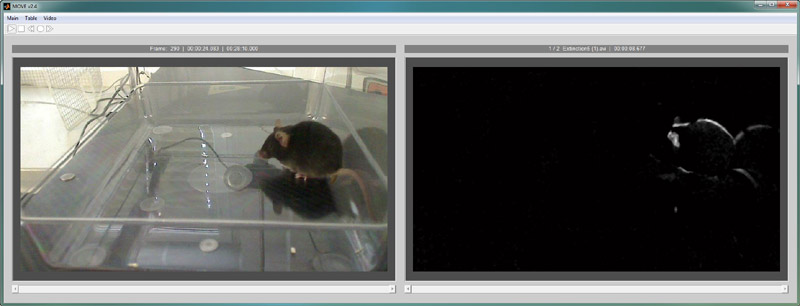

Dual camera view - Two oppositely located, horizontal cameras allow a clear view of the object's head at any point in time.

Motion analysis - Motion is quantified as the total number of pixels that changed significantly between the current video frame (left panel) and its predecessor. During the batch process, these pixels are visualized as a grayscale image (right panel).
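The pixel-counting idea in this caption can be sketched as simple frame differencing. This is an illustration under stated assumptions rather than MOVE's actual algorithm: frames are taken to be nested lists of gray values (0 to 255), and "significantly changed" is modeled with a fixed, hypothetical `threshold`, since the text does not define the criterion.

```python
def changed_pixels(prev, curr, threshold=25):
    """Return (count, diff): the number of pixels whose gray value changed by
    more than `threshold` (an assumed criterion), plus the difference image."""
    diff = [
        [abs(c - p) for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]
    count = sum(value > threshold for row in diff for value in row)
    return count, diff


def motion_trace(frames, threshold=25):
    """One motion value per frame transition: frame i compared with frame i-1."""
    return [
        changed_pixels(prev, curr, threshold)[0]
        for prev, curr in zip(frames, frames[1:])
    ]


# Three tiny hypothetical 2x3 grayscale frames.
frames = [
    [[10, 10, 10], [10, 10, 10]],
    [[10, 200, 10], [10, 10, 12]],
    [[200, 10, 10], [90, 10, 12]],
]
trace = motion_trace(frames)
print(trace)
```

The difference image returned alongside the count corresponds to the kind of grayscale visualization mentioned in the caption; small changes such as sensor noise stay below the threshold and do not contribute to the motion value.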

Event list - A standard-compliant event list can easily be generated by dividing the given motion trace into different motion levels (red dashed lines; representing e.g. immobility, sniffing, grooming and exploration) and selecting the option "Generate Event List" from the right-click context menu.
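As a rough sketch of what dividing a motion trace into levels could look like, the code below assigns each trace value to a level and merges consecutive values of the same level into one event. The function name `event_list`, the `(start, end, label)` layout, the thresholds and the frame rate are all assumptions for illustration; the format of MOVE's standard-compliant event lists is not specified here.

```python
from bisect import bisect_right
from itertools import groupby


def event_list(trace, thresholds, labels, frame_rate):
    """Turn a motion trace into (start, end, label) events in seconds.

    thresholds -- ascending level boundaries (the 'dashed lines')
    labels     -- one label per level, so len(labels) == len(thresholds) + 1
    """
    if len(labels) != len(thresholds) + 1:
        raise ValueError("need exactly one more label than thresholds")
    # bisect_right gives the index of the level each motion value falls into.
    levels = [labels[bisect_right(thresholds, value)] for value in trace]
    events = []
    index = 0
    for label, run in groupby(levels):
        length = len(list(run))  # number of consecutive samples on this level
        events.append((index / frame_rate, (index + length) / frame_rate, label))
        index += length
    return events


# Hypothetical trace (changed pixels per sample) sampled at 2 values per second.
trace = [0, 2, 1, 40, 55, 300, 280, 3]
events = event_list(
    trace,
    thresholds=[10, 100],
    labels=["immobility", "sniffing", "exploration"],
    frame_rate=2.0,
)
print(events)
```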

Multidimensionality - The Boolean algebra operators AND, OR, XOR and NOT allow an arbitrary number of event lists (data sources) to be combined in order to reach the most accurate results possible.